🕶️ TM-003: Your 🜁 Secret Score 👁️🗨️

🕶️ TM-003: The Invisible Judge

Theme: Algorithmic scoring, shadow profiles, credit, risk, policing.

The Score That Spoke First

It always starts the same way.

A login attempt. A spinning wheel. A denial that arrives so fast you’d swear the machine was waiting for you.

No warning.

No explanation.

Just the digital equivalent of a closed door and a bouncer who doesn’t blink.

Maybe it’s a frozen card.

Maybe your credit line shrinks overnight like it’s been left out in the cold.

Maybe a landlord ghosts your application before your name even hits a human retina.

Or the job portal thanks you for applying and, without hesitation, wishes you luck in your future endeavors.

You don’t feel rejected.

You feel evaluated.

Something decided your risk before you even showed up.

And suddenly the silence of the denial feels louder than any accusation.

Because a machine doesn’t need to insult you.

It only needs to score you.

Where You First Felt the Invisible Judge

Most of us encounter the Invisible Judge long before we realize it has a gavel.

A transaction gets flagged because you made the dangerous choice of buying groceries three miles from your house.

Insurance quotes fluctuate as if your car has developed a secret nightlife.

A job portal tells you the position has “moved on” within six minutes of your application.

You walk through your neighborhood and feel police presence increase. Not because of crime, but because someone drew a probability line across your ZIP code.

These aren’t coincidences.

They’re decisions the system made about you, in advance, based on patterns it assembled behind your back.

We call them “glitches,” “friction,” “the algorithm being weird again.”

But the truth is more uncomfortable:

The algorithm isn’t being weird.

It’s being decisive.

And you’re not a user.

You’re a variable.

How the Shadow Profile Was Built

Long before the Judge rendered its first verdict, something else was quietly taking notes.

Credit agencies built the first rough sketches: numbers intended to summarize your “worthiness” in a single, flattering three-digit haiku.

Retail loyalty cards added behavioral shading. Buy two bottles of sriracha in one week and suddenly you’re in the “spicy risk segment.”

Search engines folded in your unsent questions, hovering pauses, and midnight curiosities.

Data brokers rummaged through it all with the enthusiasm of bargain hunters at a yard sale.

Piece by piece, a probabilistic portrait took shape.

A shadow profile.

Your unofficial twin.

Your statistical doppelgänger.

And the Judge doesn’t consult your real self.

It consults the twin.

The one made of correlations, not character.

The Humans Who Taught the Machine to Judge

Behind the scenes in brightly lit offices, a very earnest cast of characters tuned the Judge into being.

Data scientists trying to improve fraud detection.

Machine-learning teams reducing false positives.

HR vendors optimizing “fit” as if culture were a scented candle.

Insurance analysts squeezing uncertainty out of the actuarial tables like juice from a reluctant lemon.

None of them meant to build a silent judiciary.

They were just trying to be helpful.

But that’s how modern trouble starts. Under fluorescent lights, in the pursuit of efficiency, with no villain in sight.

They thought they were minimizing uncertainty.

They didn’t notice they were minimizing humanity.

The Institutions That Can’t Function Without Scoring You

Banks don’t want borrowers.

They want predictable borrowers.

Insurers don’t want drivers.

They want people whose accelerometer data screams “responsible adult.”

Employers don’t want résumés.

They want statistical reassurance disguised as “screening.”

Governments don’t want fairness.

They want throughput.

And police departments don’t want omniscience.

They want maps that pretend to offer it.

No one is twirling a mustache.

There is no smoky backroom conspiracy.

There is only the relentless incentive to avoid risk.

And the easiest way to avoid risk is to assume the worst quietly and automatically.

The Judge is not malevolent.

It’s bureaucratic.

Which is somehow worse.

When the Judge Stops Waiting for Evidence

The real shift, the one nobody tells you about, happens when the system stops observing and starts pre-empting.

Your credit limit drops “just in case.”

Your application is rejected because someone who clicked similarly defaulted once in 2014.

Your insurance premium rises because your phone accelerometer thinks you brake with the urgency of a startled raccoon.

Your neighborhood gets flagged for “heightened attention” because an algorithm found three points on a heat map and got a little imaginative.

You are not punished for what you’ve done.

You are restricted because of what the model thinks you might do.

The Judge doesn’t wait for crime.

It forecasts it.

It doesn’t need wrongdoing.

It only needs probability.

Prediction becomes pre-sentencing.

And the sentence is always delivered politely, instantly, and without appeal.

The Truth You Didn’t Want to See

Here is the part people pretend they understand until they actually do:

The Judge does not evaluate your identity.

It evaluates your statistical adjacency.

You are interpreted through the lens of:

People who look like you.

People who shop like you.

People who once paused on the same video thumbnail.

People who committed no crimes but lived near someone who did.

People who share your demographic clustering with eerie precision.

You inherit the sins, or merely the habits, of your data neighbors.
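For the curious, statistical adjacency can be sketched in a few lines. This is a toy nearest-neighbor score, not any real lender's model: the features, the sample data, and the function name `adjacency_score` are all invented for illustration. It simply reports what share of your closest data neighbors defaulted.

```python
from math import dist

# Hypothetical data neighbors: (features, defaulted?) pairs.
# The two features are made up for this sketch:
# (late-night purchases per week, typical miles from home per trip).
NEIGHBORS = [
    ((1.0, 2.0), False),
    ((1.5, 2.5), False),
    ((6.0, 9.0), True),
    ((6.5, 8.0), True),
    ((7.0, 9.5), False),
]


def adjacency_score(person, neighbors=NEIGHBORS, k=3):
    """Return the share of the k closest neighbors who defaulted."""
    # Sort everyone by straight-line distance to this person, keep the k nearest.
    closest = sorted(neighbors, key=lambda n: dist(person, n[0]))[:k]
    return sum(1 for _, defaulted in closest if defaulted) / k


# "You" have never defaulted, but two of your three nearest neighbors did.
you = (6.2, 8.8)
print(adjacency_score(you))
```

Nothing in the score describes what you did. It describes who you resemble.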

Worse, the Judge creates the conditions that make its predictions feel correct.

A lowered credit limit increases financial stress.

Increased police presence raises the likelihood of a stop.

A rejected job application forces you into lower-paying work.

A frozen account leads to “suspicious” follow-up activity.

The Judge does not simply anticipate the future.

It collaborates with it.
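That collaboration can be shown with a deliberately simple simulation. Everything here is an assumption made for the sketch: the function name `simulate_feedback`, the numbers, and the rule that a neighborhood gets more patrols whenever recorded stops reach a threshold. The underlying stop rate per patrol never changes; only the attention does.

```python
def simulate_feedback(patrols=4, stop_rate=0.25, threshold=1.0, boost=2, rounds=3):
    """Toy loop: more patrols produce more recorded stops, which justify more patrols.

    Returns a list of (patrols, recorded_stops) pairs, one per round.
    """
    history = []
    for _ in range(rounds):
        # Recorded stops depend on how hard the system looks, not on any change in behavior.
        recorded = patrols * stop_rate
        history.append((patrols, recorded))
        # The model reads its own output as evidence and escalates.
        if recorded >= threshold:
            patrols = patrols * boost
    return history


for patrols, recorded in simulate_feedback():
    print(f"patrols={patrols} recorded_stops={recorded}")
```

Start the same neighborhood with `patrols=2` and the threshold is never reached, so nothing escalates. Same people, same behavior, different starting guess.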

Humans change.

Patterns do not.

The Judge knows which one is easier to manage.

The Judge That Already Knew

Hours later, you return to the same screen that denied you.

The cursor blinks.

The room is quiet.

It feels like the system should apologize, but systems don’t apologize. They just update.

Then a new line appears on the page:

“TM-004: The Puppet Masters of Attention.”

You didn’t type it.

You didn’t even breathe on the keyboard.

The Judge has already decided what you’re investigating next.

Fade to black.

📎 ALGORITHM SURVIVAL KIT: TM-003

How to live sanely, strategically, and sovereignly in a world where you’re judged before you act.

TM-001 taught awareness.

TM-002 taught agency.

TM-003 teaches choice.

Here is how you reclaim it.

1. Find Your Shadow Profile

Because you can’t reshape what you can’t see.

Every major platform maintains a version of you that is part biography, part hallucination, and part cosmic improv. Credit bureaus, social platforms, ad networks, data brokers: they all keep a mirror of you that you’ve never been allowed to polish.

Start with a tactical path.

Where to look:

Google Ad Profile → “Ad personalization”

Facebook → “Off-Facebook Activity” + “Interests”

LinkedIn → “Advertising Data”

Data brokers like Acxiom, CoreLogic, LexisNexis (where allowed)

Credit bureaus → “Consumer disclosures”

Amazon → “Browse & Purchase History Recommendations”

Then run the signature TM-style prompt:

🧠 Prompt: Reveal My Shadow Self

Paste into ChatGPT:

“Analyze the kinds of behavioral inferences companies can derive from browsing patterns, purchase history, location data, and app usage. Build a probable ‘shadow profile’ of me and list the categories, traits, and risk assumptions companies are likely inferring. Then suggest which ones are most important to challenge or correct.”

This gives you insight and a map.

2. Interrupt the Scoring Loop

Because models improve when you behave predictably.

And degrade when you behave interestingly.

Your invisible risk score depends on stability. You break the loop by refusing to be an easy read.

Tactical moves:

Click outside your usual category once a day.

Break patterns of time, not just patterns of content.

Avoid being the algorithmic equivalent of a beige cardigan.

Randomize non-critical actions (searches, entertainment, routes, viewing times).

Now arm yourself with an actual disruptive tool:

🧠 Prompt: Help Me Break My Predictability

Paste into ChatGPT:

“Identify the behavioral patterns algorithms are most likely inferring from my daily digital habits. Then design a 7-day ‘pattern disruption plan’ that introduces strategic randomness to weaken overly confident algorithmic scoring.”

Engineered unpredictability.

Elegant chaos.

3. Boundary Setting for Judgment Systems

Because systems judge most harshly when you let them look everywhere.

Reduce the surface area of inference:

Tactics:

Turn off precise location for apps that don’t need it.

Disable third-party cookie tracking in browsers.

Limit background app refresh (a data buffet).

Audit permissions monthly.

Use a privacy relay or an encrypted DNS resolver so fewer intermediaries can log the sites you look up.

Then draw up a boundary blueprint:

🧠 Prompt: Build My Algorithmic Boundary

Paste into ChatGPT:

“Given modern risk-scoring systems, identify the highest-impact privacy boundaries an average person can set to reduce unwanted inference. Create a simple checklist I can implement this week, ordered by effort vs. benefit.”

The goal:

Not paranoia.

Practical sovereignty.

4. The Adversarial Data Trick

Because sometimes the best way to fight an algorithm is to feed it junk food.

Strategic data noise disrupts overly confident models without harming your actual life.

Examples:

Search for unrelated topics once a day.

Click on emotionally neutral content.

Use anonymized browsers occasionally to seed non-pattern behavior.

Diversify purchases unpredictably (small, harmless items).

Then use the prompt that synthesizes it:

🧠 Prompt: Teach Me Adversarial Browsing

Paste into ChatGPT:

“Explain how adversarial data can weaken overconfident behavior-prediction models. Then design a personalized ‘noise injection routine’ that helps diversify my data trail without creating inconvenience.”

This one raises eyebrows.

In a good way.

5. The Weekly Self-Audit

Because nothing is as powerful as noticing the pattern before the pattern notices you.

Each week, track:

A denial that seemed automatic

A price change that felt algorithmic

A recommendation that felt like a risk proxy

A decision that arrived suspiciously fast

A moment where a model clearly acted before you did
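If a notebook feels too analog, the audit can live in a small script. This is only one possible shape for the log: the `JudgmentMoment` fields and both helper functions are hypothetical names chosen for this sketch, and the sample week is invented.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class JudgmentMoment:
    day: str
    system: str                 # free-text label, e.g. "bank" or "job portal"
    what_happened: str
    seconds_to_decision: Optional[float] = None  # fill in when the speed itself felt suspicious


def systems_by_frequency(moments):
    """Count which systems judged you most often this week."""
    return Counter(m.system for m in moments).most_common()


def as_prompt_lines(moments):
    """Format the week's moments as a plain list you can paste under a prompt."""
    lines = []
    for m in moments:
        speed = ""
        if m.seconds_to_decision is not None:
            speed = f" (decided in about {m.seconds_to_decision:g}s)"
        lines.append(f"- {m.day}: {m.system}: {m.what_happened}{speed}")
    return "\n".join(lines)


week = [
    JudgmentMoment("Mon", "bank", "card frozen after a grocery purchase"),
    JudgmentMoment("Wed", "job portal", "application rejected", seconds_to_decision=360),
    JudgmentMoment("Fri", "bank", "credit limit lowered"),
]
print(systems_by_frequency(week))
print(as_prompt_lines(week))
```

The frequency count shows which judge sees you most. The formatted list is what you hand to the reflection prompt.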

Then pick up the reflection tool:

🧠 Prompt: Decode the Judgment Moments

Paste into ChatGPT:

“I will list a few moments from my week where a system made a decision about me. For each one, infer the type of algorithm likely involved, the data that may have triggered it, and how I can reduce false assumptions in the future.”

This turns anxiety into literacy.

Literacy into agency.

Agency into choice.

TM-001 → Awareness

TM-002 → Agency

TM-003 → Choice

You are no longer navigating the system blind.

You have a flashlight.

You have a map.

And now, finally, you have options.

🗳️ POLL: How do you feel about your Shadow Profile?

Now that you know you have a statistical twin… what’s your reaction?

Options:

😬 Mild existential dread

🔍 Curious to peek behind the curtain

🛡️ Motivated to regain control

🤖 Accepting, it’s part of digital life now

❓ Still trying to wrap my head around it

The Stinger

The Survival Kit closes.

You feel prepared. Finally, maybe even a little ahead of the machine.

The cursor returns, blinking with its usual mix of patience and menace.

Then two lines type themselves across the screen, calm as a diagnosis delivered by a doctor who’s already moved on to the next chart:

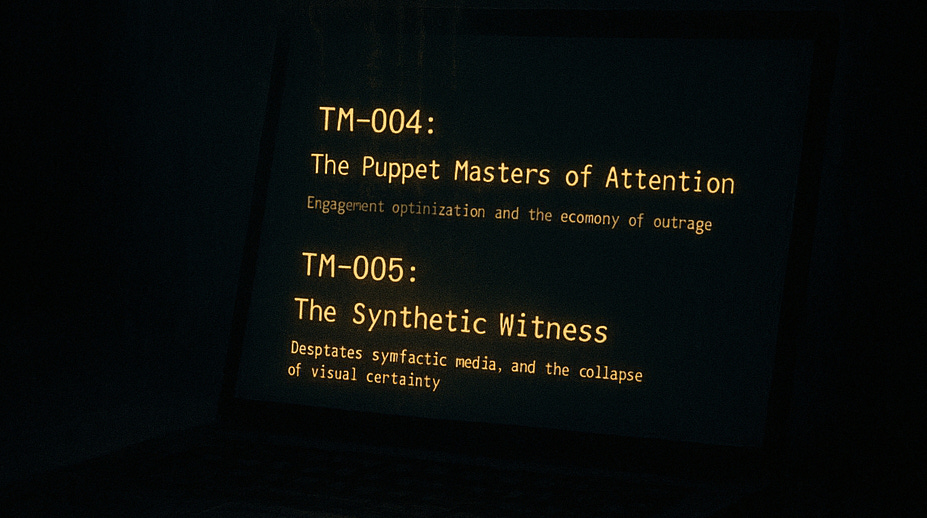

TM-004: The Puppet Masters of Attention

Theme: Engagement optimization and the economy of outrage.

A second line follows, slower, deliberate, almost theatrical:

TM-005: The Synthetic Witness

Theme: Deepfakes, synthetic media, and the collapse of visual certainty.

You stare.

The machine does not.

It has already queued your next files.

Not as predictions.

As inevitabilities.

Fade to black.