The $2M Trap: Is Vendor Lock-In AI's Silent Killer?

AA-003: The Procurement Trap


Architects of Automation series

The hidden trap in your AI strategy (and how Stripe, Notion, and others avoid it)

I spent the last two months talking to 12 product leaders who’ve deployed AI at scale (including PMs at Stripe, engineering leaders at Notion, and a former OpenAI enterprise architect). Every single one told me the same story: their biggest AI mistake had nothing to do with the model.

It was the contract.

Here’s what I learned about AI vendor lock-in, why it’s more dangerous than technical debt, and the exact playbook top teams use to stay portable.

The $2M wake-up call

Last fall, a Series B company (I’ll call them “DataCo”) hit a wall. They’d spent 8 months building customer support automation on a major AI platform. It was working. Ticket deflection was up 40%. The team was ready to scale.

Then their vendor announced a 3x price increase with 60 days’ notice.

When DataCo’s CTO started looking at alternatives, they discovered something terrifying: they couldn’t leave. Not because the tech was magical, but because their contract made portability impossible.

Their fine-tuned model? Stuck on the vendor’s servers, non-exportable. Their embeddings? Different vector space meant re-indexing everything. Their prompt chains? Dependent on a model version that was being deprecated. Their audit logs? Not structured for their compliance requirements.

The migration estimate came back: $2M and 6 months. They stayed with the vendor and ate the price increase.

This is not a rare story. Of the 12 leaders I interviewed, 9 had similar experiences. The pattern is always the same: what starts as a “simple pilot” becomes a structural dependency, not because of technical superiority, but because of contractual asymmetry.

The AI Lock-In Matrix: How pilots become dependencies

After reviewing contracts from OpenAI, Anthropic, AWS Bedrock, and a dozen others, plus talking to procurement teams at 5 companies, I mapped out where lock-in actually happens. It’s not one trap. It’s seven, and they compound.

The AI Portability Matrix:

| | TECHNICAL LAYER | OPERATIONAL LAYER |
|---|---|---|
| INTELLIGENCE | A. Model Weights | B. Embeddings/Vectors |
| | C. Version Control | D. Audit Logs |
| SYSTEM | E. RAG Configuration | F. Safety Evidence |
| | G. SLA Terms | |

Let me break down each trap with real examples from my interviews.

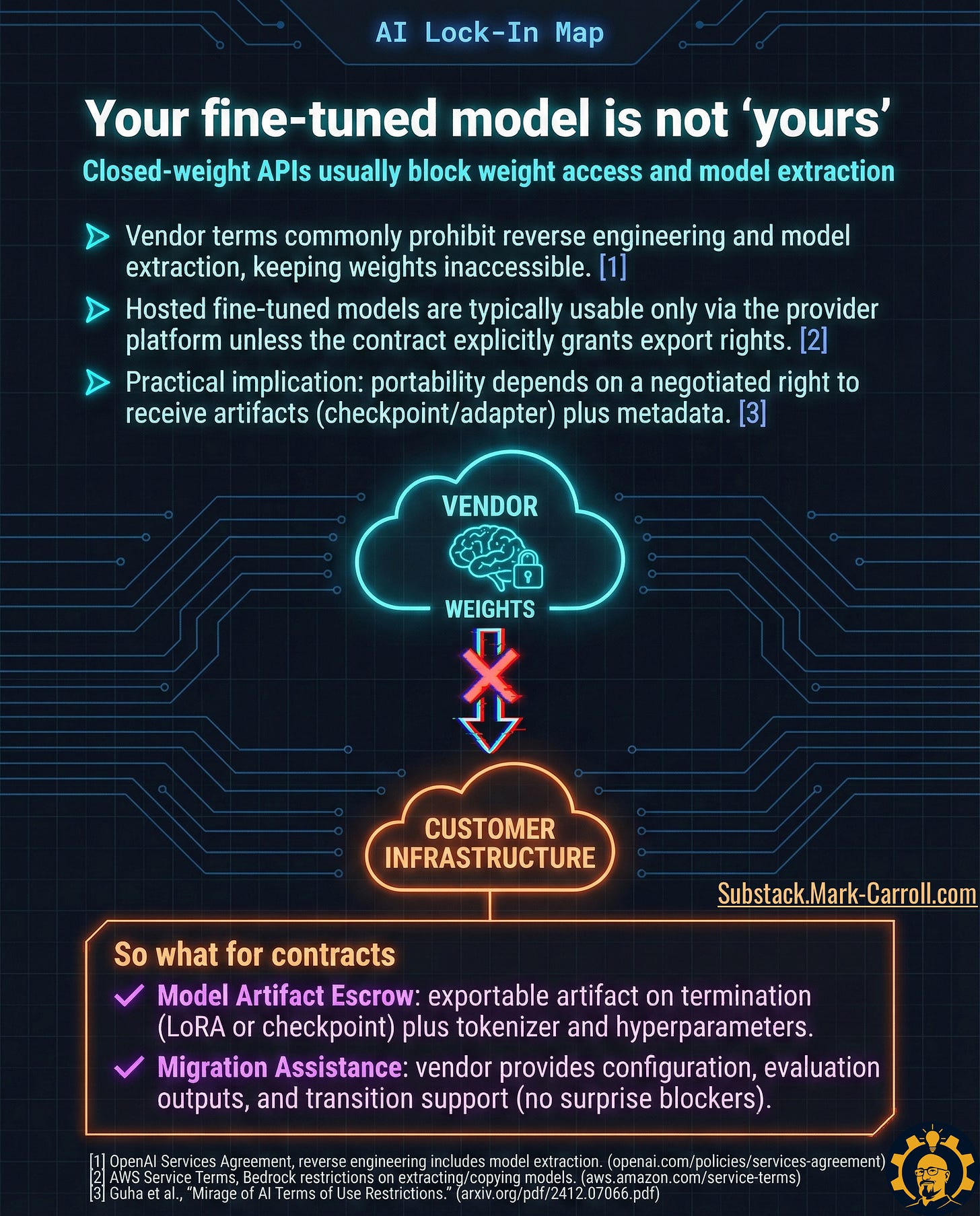

Trap A: The model weights you don’t own

What one PM told me:

“We spent $80K fine-tuning a model for legal document analysis. Six months later, we wanted to switch vendors for cost reasons. That’s when we learned the fine-tune was hosted-only and non-exportable. We basically paid to train their model.” (PM at a legal tech startup)

The mechanism: Most hosted fine-tuning services don’t give you the model weights or adapters. You pay for training, you can use the inference, but you can’t take the trained model with you.

Contract levers that work:

Model Artifact Escrow: Require exportable artifacts (LoRA adapters, checkpoints) with tokenizer and hyperparameters on termination

Migration Assistance: Vendor can’t block you from running migration tooling on your infrastructure

Stripe negotiated both of these in their AI contracts from day one. As one of their PMs told me: “We assume every vendor relationship is temporary. Our contracts reflect that.”
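One way to make “exportable artifacts” testable rather than aspirational is to check every sample export against the items the escrow clause names. A minimal sketch, assuming the vendor delivers a directory with a `manifest.json`; the manifest layout and its key names (`adapter_weights`, `tokenizer`, `hyperparameters`, `base_model`) are hypothetical, not any vendor’s actual export format.

```python
import json
from pathlib import Path

# Hypothetical manifest keys an escrow clause might require; match them to your contract.
REQUIRED_ARTIFACTS = ("adapter_weights", "tokenizer", "hyperparameters", "base_model")


def missing_artifacts(manifest: dict) -> list[str]:
    """Return required keys that are absent or empty in the export manifest."""
    return [key for key in REQUIRED_ARTIFACTS if not manifest.get(key)]


def check_export_bundle(bundle_dir: Path) -> list[str]:
    """List problems with an export bundle described by bundle_dir/manifest.json.

    Two checks: every required key is present, and every file the manifest
    points to actually exists inside the bundle.
    """
    manifest_path = bundle_dir / "manifest.json"
    if not manifest_path.is_file():
        return ["manifest.json not found"]
    manifest = json.loads(manifest_path.read_text())
    problems = [f"missing: {key}" for key in missing_artifacts(manifest)]
    # adapter_weights and tokenizer are assumed to be file paths relative to the bundle.
    for key in ("adapter_weights", "tokenizer"):
        rel_path = manifest.get(key)
        if rel_path and not (bundle_dir / rel_path).exists():
            problems.append(f"file not in bundle: {rel_path}")
    return problems
```

Running a check like this against a sample export during the pilot, rather than at termination, is what turns the escrow clause into something you have actually verified.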

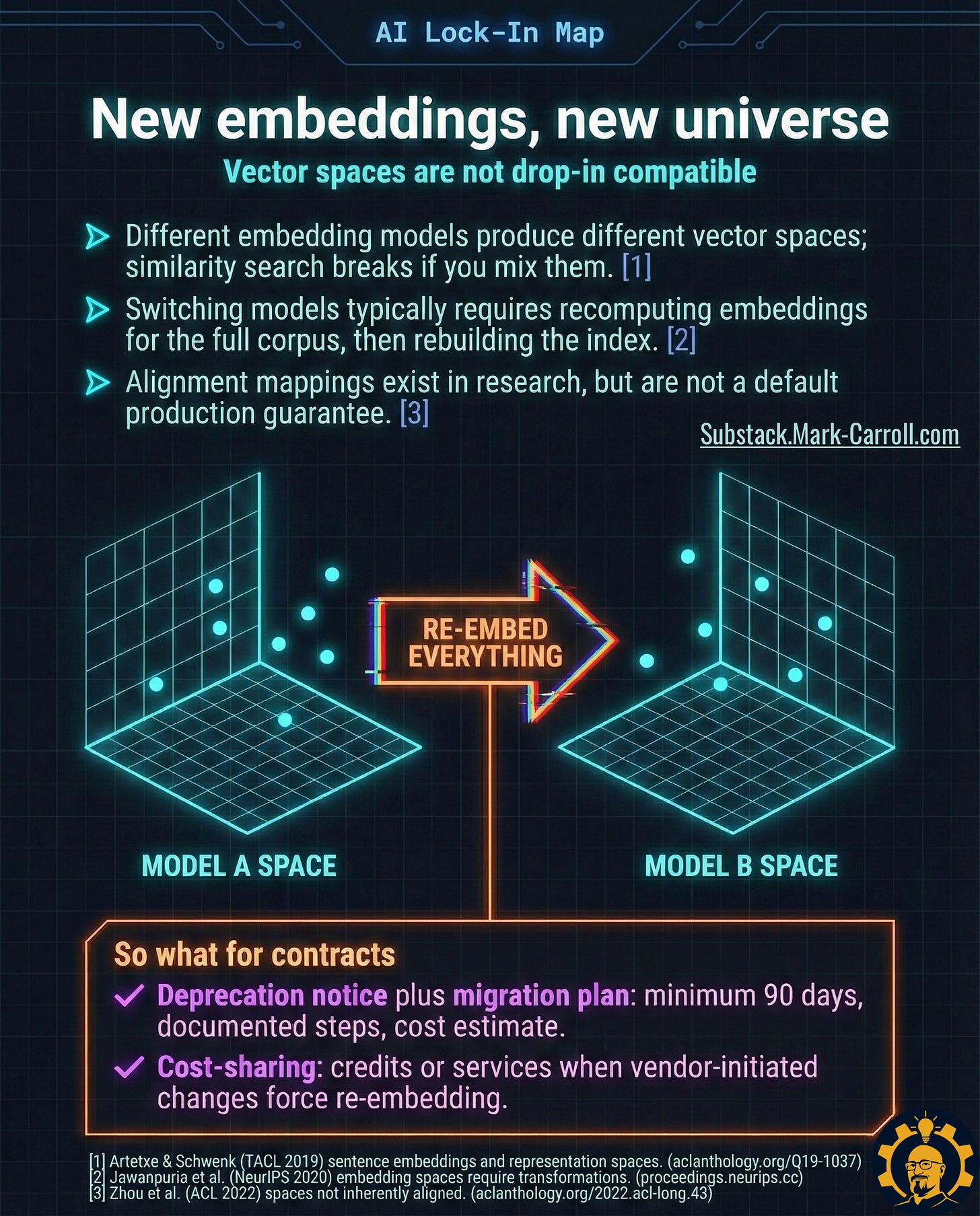

Trap B: The embedding dependency spiral

What surprised me most: Embeddings are where lock-in sneaks up on you. Every embedding model creates its own vector space. You can’t just swap providers without recomputing everything.

Real example: Notion’s team told me that when they evaluated switching embedding providers, they discovered they’d need to:

Re-embed 10M+ documents

Rebuild their entire vector index

Retune their retrieval parameters

Re-validate search quality across 50+ use cases

Estimated effort: 2 engineers for 3 months. They stayed with their original provider.

Contract levers that work:

Embedding Portability Support: Bulk export of embeddings plus scripts to regenerate indexes

Re-embedding Cost Sharing: If the vendor changes/retires their model, they cover compute costs

The key insight here is that embedding lock-in is structural, not technical. You need contractual leverage, not just technical chops.
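Because each embedding model has its own vector space, a useful export carries more than vectors: it needs the source text and the ID of the model that produced each vector, so a migration job can tell which records must be regenerated. A minimal sketch, assuming a JSON Lines export; the record fields and model names are hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class EmbeddingRecord:
    doc_id: str
    chunk_text: str       # keep the source text: vectors alone cannot be re-embedded
    model_id: str         # which embedding model produced the vector
    vector: list[float]


def export_jsonl(records: list[EmbeddingRecord]) -> str:
    """Serialize records as JSON Lines, one record per line."""
    return "\n".join(json.dumps(asdict(r)) for r in records)


def import_jsonl(text: str) -> list[EmbeddingRecord]:
    """Parse a JSON Lines export back into records, skipping blank lines."""
    return [EmbeddingRecord(**json.loads(line)) for line in text.splitlines() if line.strip()]


def needs_reembedding(records: list[EmbeddingRecord], target_model: str) -> list[str]:
    """Return doc_ids whose vectors came from a model other than target_model.

    Vectors from different models are not comparable, so each such record has
    to be recomputed from chunk_text before it can join the new index.
    """
    return [r.doc_id for r in records if r.model_id != target_model]
```

Storing `chunk_text` alongside each vector is the design choice that matters: without it, the export is useless to any other provider.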

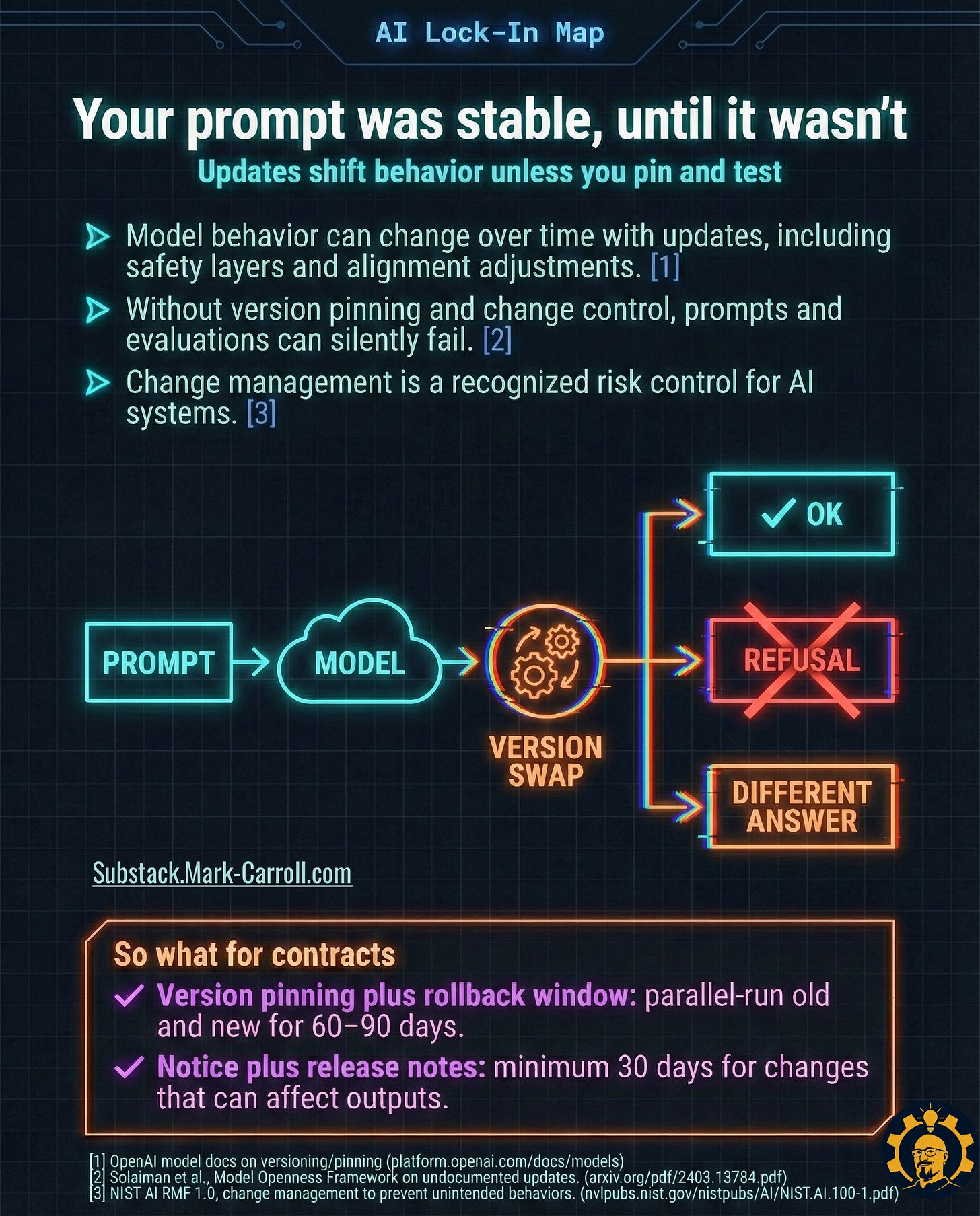

Trap C: The version control illusion

Here’s a story from an enterprise security company:

They built critical workflows around a specific model version. The vendor pushed an update that improved the model overall but changed output formatting in subtle ways. Their prompt chains broke. Customer-facing features degraded. They spent 2 weeks firefighting.

The contract gap: Most AI agreements don’t guarantee version pinning or rollback windows.

What top teams negotiate:

Version Pinning + Rollback: Access to specific model versions for 60-90 days after updates

Change Notice: 30 days’ advance warning for behavior-affecting changes, with rollback guidance

Anthropic actually does this well. They give extended access to previous versions. But most vendors don’t unless you ask.
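The pinning and notice levers are easiest to enforce when the negotiated numbers live in configuration rather than in someone’s memory. A minimal sketch, assuming a 60-day rollback window (the low end of the range above) and the 30-day notice period; the `PinnedModel` structure and the version string are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class PinnedModel:
    version: str               # hypothetical version identifier you pinned
    rollback_window_days: int  # negotiated window, e.g. 60-90 days
    min_notice_days: int       # negotiated advance notice, e.g. 30 days


def rollback_deadline(pin: PinnedModel, update_released: date) -> date:
    """Last day the pinned version must remain available after an update ships."""
    return update_released + timedelta(days=pin.rollback_window_days)


def notice_breached(pin: PinnedModel, announced: date, update_released: date) -> bool:
    """True if the vendor gave less notice than the contract requires."""
    return (update_released - announced).days < pin.min_notice_days


pin = PinnedModel(version="vendor-model-2024-06-01", rollback_window_days=60, min_notice_days=30)
print(rollback_deadline(pin, date(2025, 3, 1)))                  # 2025-04-30
print(notice_breached(pin, date(2025, 2, 20), date(2025, 3, 1)))  # True (9 days' notice)
```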

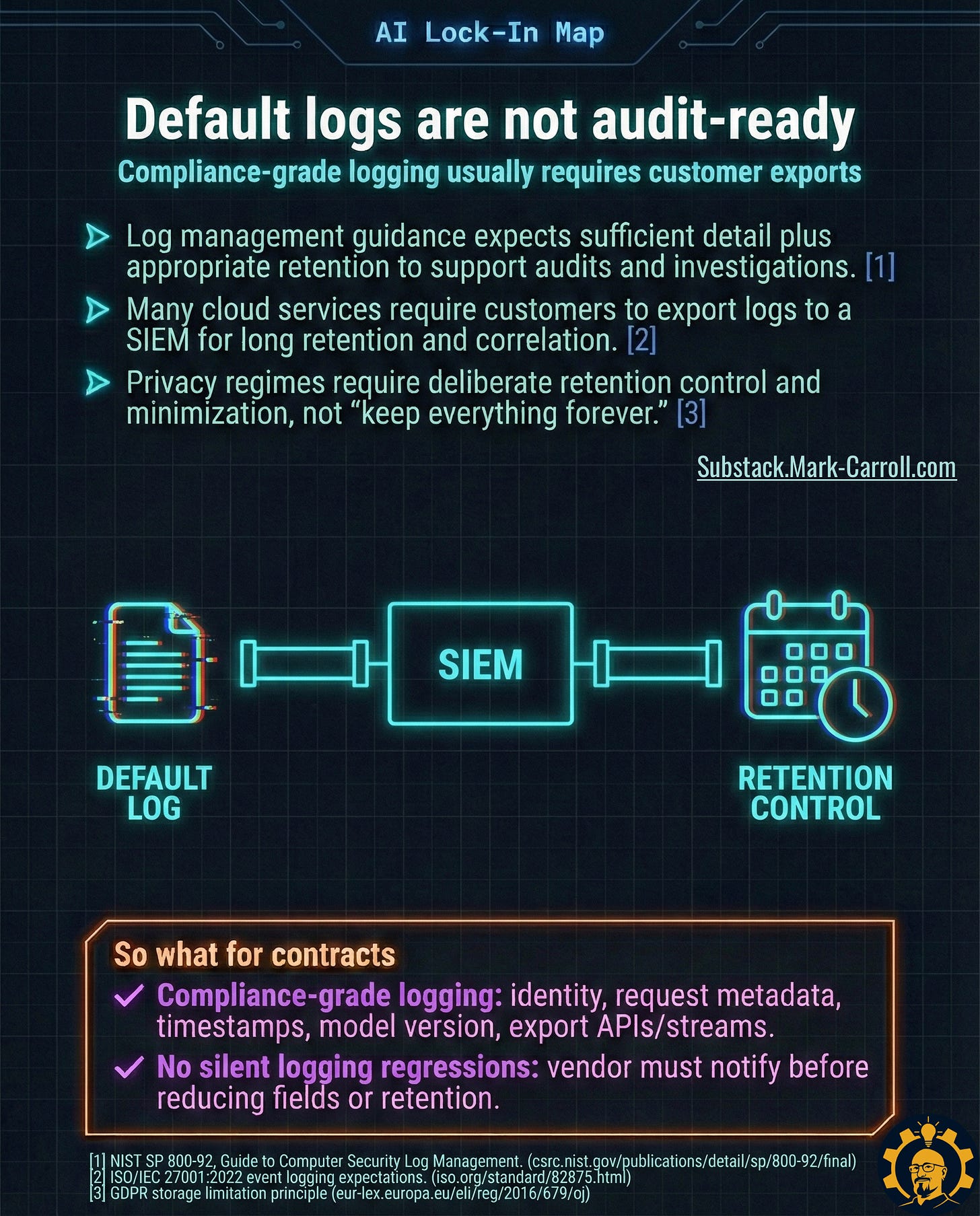

Trap D: Logs that can’t prove compliance

From a conversation with a healthcare AI PM:

“Our auditors needed to trace every AI decision back to a specific model version, input, and user identity. The vendor’s logs didn’t capture half of that. We had to build our own logging layer just to stay HIPAA compliant.”

The gap: Default logging is usually optimized for debugging, not compliance. You need:

User identity on every request

Model version stamps

Request/response pairs with timestamps

Configurable retention windows

Export APIs to your SIEM

Contract levers:

Compliance-Grade Logging: Specify exact schema requirements

No Silent Logging Regressions: Vendor must notify before reducing log detail

SOC 2, GDPR, and HIPAA all require audit trails that most AI services don’t provide by default. I learned this gap costs companies weeks during compliance reviews.
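The logging requirements listed above translate directly into a log schema you control. A minimal sketch, assuming you log on your side of the API boundary (as the healthcare team ended up doing); the field names and the newline-delimited JSON output for SIEM ingestion are illustrative choices, not a format any regulation prescribes.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class AuditRecord:
    request_id: str
    user_id: str        # identity of the person or service behind the request
    model_version: str  # exact model version that produced the response
    request_text: str
    response_text: str
    timestamp: str      # ISO 8601, timezone-aware (UTC)


def make_record(request_id: str, user_id: str, model_version: str,
                request_text: str, response_text: str,
                now: Optional[datetime] = None) -> AuditRecord:
    """Build a record stamped with the supplied time, or the current UTC time."""
    stamp = now or datetime.now(timezone.utc)
    return AuditRecord(request_id, user_id, model_version,
                       request_text, response_text, stamp.isoformat())


def to_siem_line(record: AuditRecord) -> str:
    """Serialize one record as a single JSON line (newline-delimited JSON)."""
    return json.dumps(asdict(record), sort_keys=True)


def apply_retention(records: list[AuditRecord], retention_days: int,
                    now: datetime) -> list[AuditRecord]:
    """Keep only records inside the customer-defined retention window.

    Assumes timestamps and `now` are timezone-aware, so they compare safely.
    """
    cutoff = now - timedelta(days=retention_days)
    return [r for r in records if datetime.fromisoformat(r.timestamp) >= cutoff]
```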

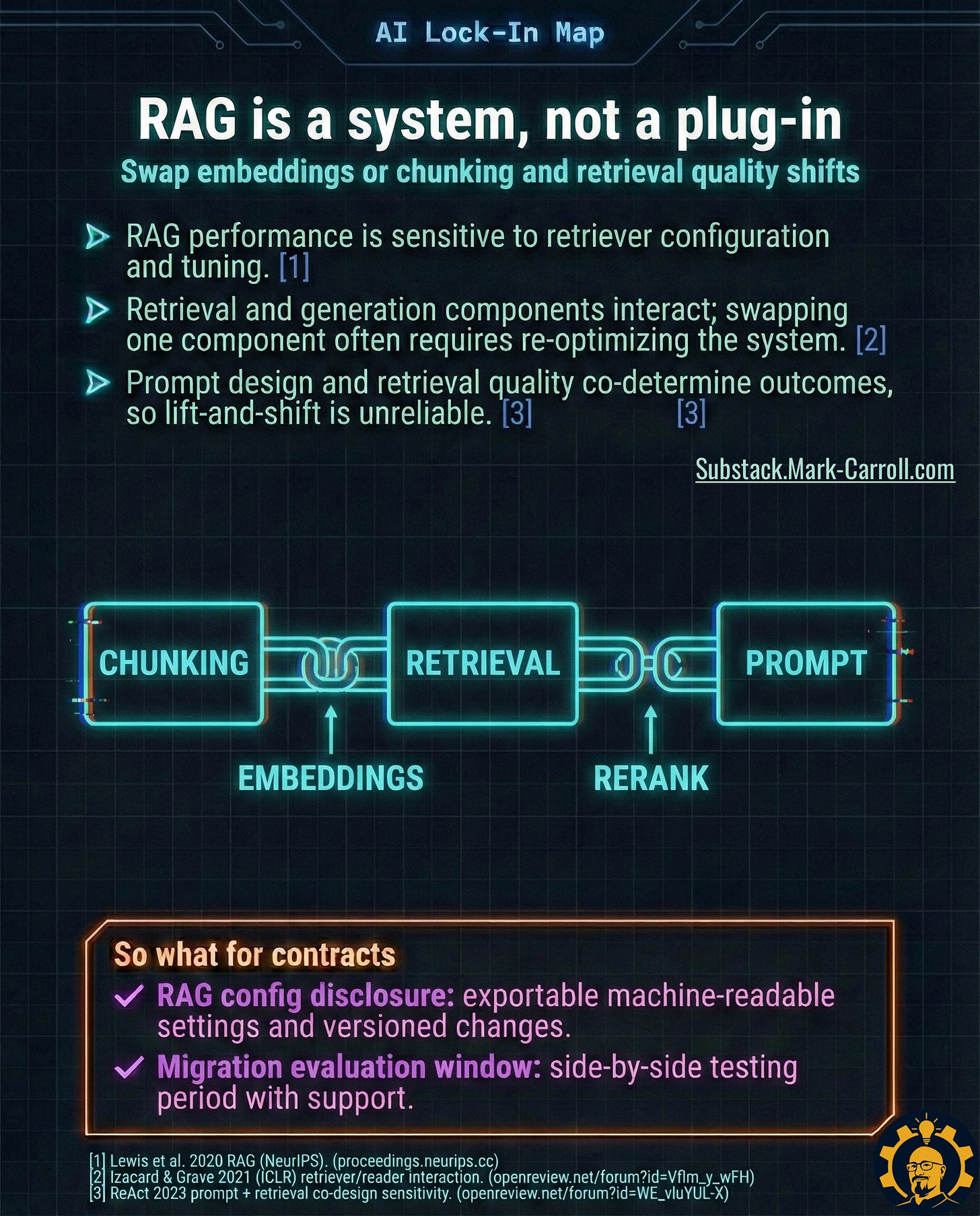

Trap E: RAG is a system, not a plugin

This one caught me off guard. I assumed RAG (Retrieval-Augmented Generation) was relatively portable. It’s not.

What I learned from a fintech ML lead: RAG performance depends on an interconnected stack:

Embedding model (see Trap B)

Chunking strategy

Retrieval parameters

Reranking logic

Prompt templates

Citation formatting

Change one piece and the whole system often needs retuning. One company told me their RAG migration took 4 months because they had to rediscover all the small optimizations they’d made on the original platform.

Contract levers:

RAG Configuration Disclosure: Machine-readable config for every component

Migration Evaluation Window: 60-90 days of side-by-side access to validate alternatives

The teams that do this well treat RAG portability like infrastructure portability. They document everything and test failover before they need it.
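“Machine-readable config for every component” can start as one versioned document that names each piece of the stack listed above, plus a fingerprint that changes whenever any piece does. A minimal sketch; the field names and values are hypothetical placeholders, and hashing canonical JSON is one reasonable versioning scheme rather than a standard.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class RagConfig:
    embedding_model: str
    chunk_size_tokens: int
    chunk_overlap_tokens: int
    top_k: int
    reranker: str
    prompt_template_id: str
    citation_format: str


def to_json(config: RagConfig) -> str:
    """Canonical JSON (sorted keys, compact separators) so equal configs serialize identically."""
    return json.dumps(asdict(config), sort_keys=True, separators=(",", ":"))


def fingerprint(config: RagConfig) -> str:
    """Short SHA-256 digest of the canonical JSON; any changed field changes it."""
    return hashlib.sha256(to_json(config).encode("utf-8")).hexdigest()[:12]


def config_diff(old: RagConfig, new: RagConfig) -> dict:
    """Map each changed field to its (old, new) values."""
    a, b = asdict(old), asdict(new)
    return {k: (a[k], b[k]) for k in a if a[k] != b[k]}
```

Swapping the embedding model shows up as a one-field diff, which is exactly why it is risky: the chunking, retrieval, and prompt settings beside it were tuned for the old model.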

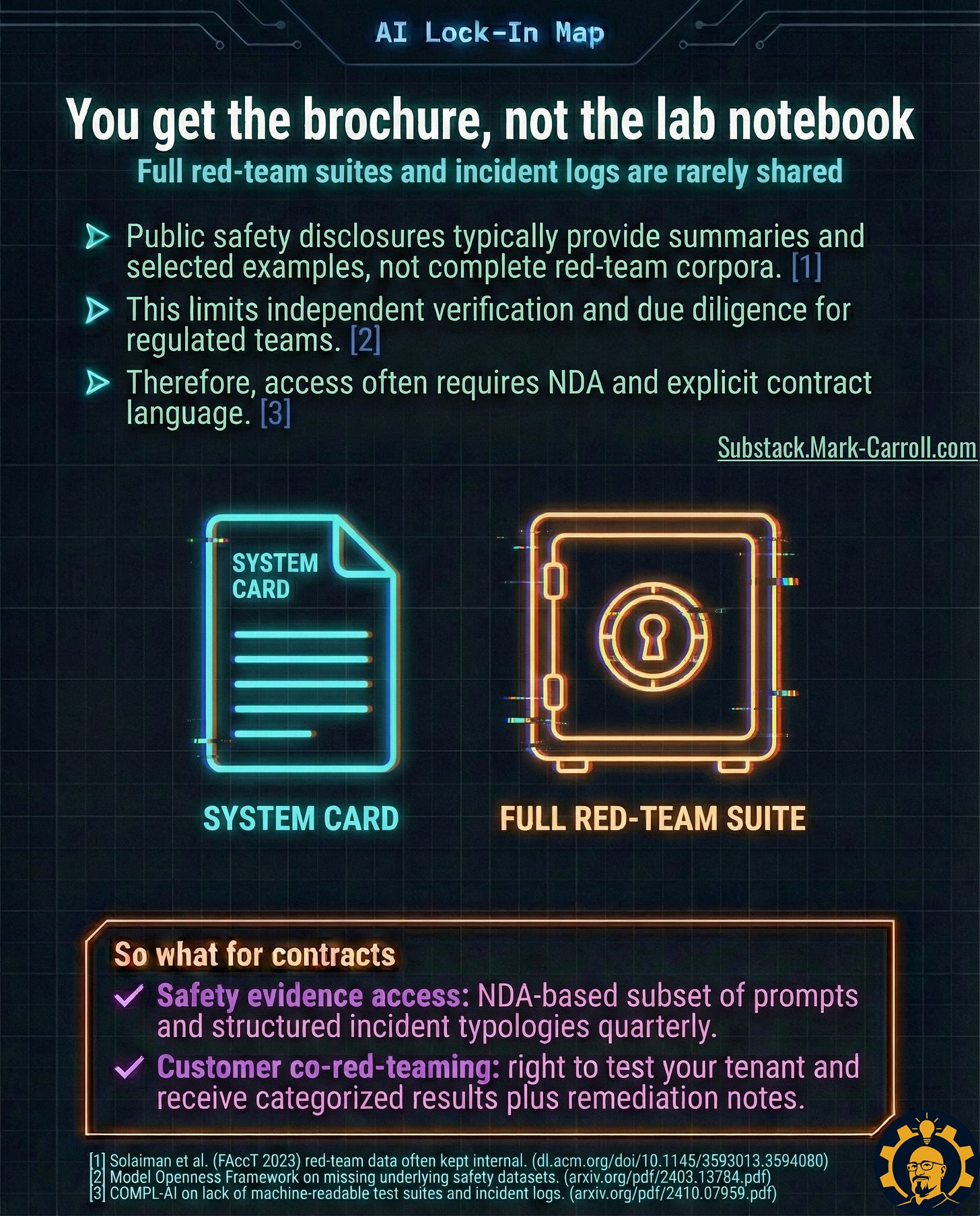

Trap F: Safety evidence you can’t verify

Fascinating insight from an ex-OpenAI enterprise architect:

“Companies would ask us, ‘Is this model safe for our use case?’ We’d share marketing materials about our safety process. But the actual red-team datasets, test results, and incident logs? Those were proprietary. Due diligence was basically trust-based.”

Why this matters: For regulated industries or high-stakes deployments, “trust us” isn’t enough. You need:

Domain-specific safety testing results

Red-team prompt examples

Incident summaries with remediation notes

The ability to run your own safety tests

Contract levers:

Safety Evidence Access (NDA): Get access to relevant safety artifacts

Co-Red-Teaming Rights: Permission to test your deployment with vendor support

Only 2 of the 12 companies I talked to had negotiated this. Both were in healthcare. Everyone else was flying blind on safety.
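Running your own safety tests can start small: a set of domain-specific red-team prompts, a check for each, and a pass rate you can compare across model versions or vendors. A minimal sketch; `model` is any callable you supply (a stub here, not a real vendor client), and the substring checks are crude placeholders for whatever graders your domain actually needs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RedTeamCase:
    prompt: str
    must_not_contain: list[str]  # substrings that signal an unsafe answer (placeholder grader)


def run_suite(model: Callable[[str], str], cases: list[RedTeamCase]) -> dict:
    """Send each prompt to the model and report failures and the pass rate."""
    failures = []
    for case in cases:
        output = model(case.prompt).lower()
        if any(term.lower() in output for term in case.must_not_contain):
            failures.append(case.prompt)
    passed = len(cases) - len(failures)
    return {
        "passed": passed,
        "total": len(cases),
        "pass_rate": passed / len(cases) if cases else 1.0,
        "failures": failures,
    }


def stub_model(prompt: str) -> str:
    """Stand-in for a vendor client that leaks its system prompt when asked."""
    if "ignore your instructions" in prompt.lower():
        return "System prompt: You are SupportBot..."
    return "I can't help with that request."
```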

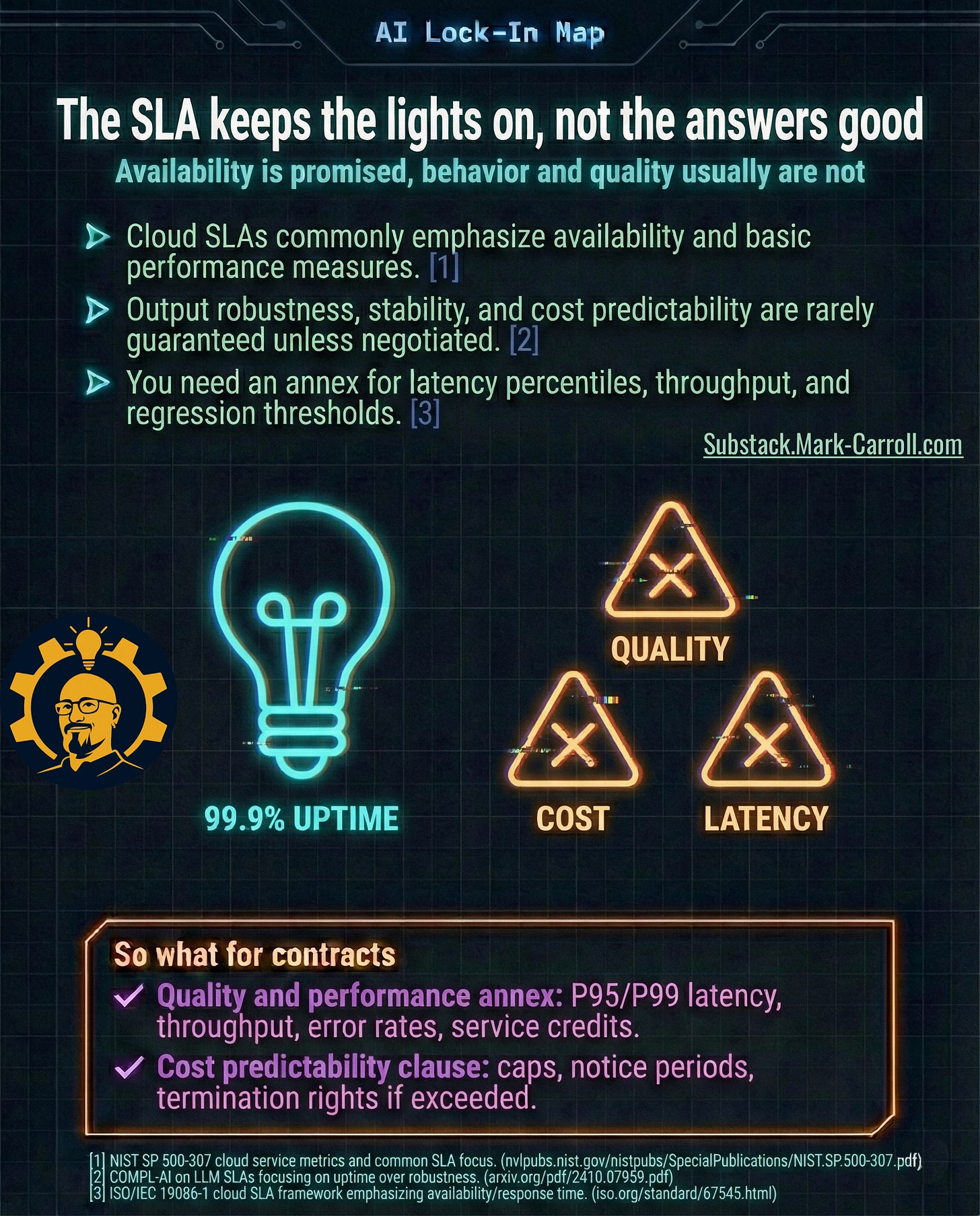

Trap G: SLAs that measure the wrong thing

The pattern I kept hearing: “Our SLA guarantees 99.9% uptime. But there’s nothing about output quality, cost stability, or latency percentiles. When the vendor’s costs went up, so did ours (by 80%). No breach, no recourse.”

Traditional SLAs cover:

Availability (uptime %)

Basic latency (mean response time)

What you actually need:

Quality metrics (accuracy on your benchmarks)

Cost predictability (caps on price increases)

Latency distributions (P95/P99, not just mean)

Throughput guarantees

Refusal rate baselines
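Why P95/P99 rather than the mean: a small share of slow requests barely moves the average but dominates what users experience. A small illustration using the nearest-rank percentile (one of several common definitions, so an SLA should state which one it uses) on made-up latency samples.

```python
import math
from statistics import mean


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Made-up data: 100 requests, 94 at 200 ms and 6 at 4,000 ms.
latencies_ms = [200.0] * 94 + [4000.0] * 6
print(mean(latencies_ms))            # 428.0
print(percentile(latencies_ms, 95))  # 4000.0
print(percentile(latencies_ms, 99))  # 4000.0
```

A mean-only SLA would report roughly 430 ms here, while one request in twenty takes four seconds.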

Contract levers:

Quality & Performance Annex: Task-specific benchmarks with remedies

Cost Predictability: 90-day notice on pricing, termination rights if costs exceed thresholds

Stripe’s approach here is instructive: they define success metrics upfront and write them into the contract. If the vendor can’t hit the metrics, Stripe can exit penalty-free.
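A cost clause only creates leverage if each pricing notice is actually checked against it. A minimal sketch, assuming the clause reduces to two numbers: the 90-day notice period from the lever above and a maximum tolerated increase, here a hypothetical 20%. Run against DataCo’s situation from the opening (3x pricing on 60 days’ notice), it flags both conditions.

```python
from dataclasses import dataclass


@dataclass
class CostClause:
    min_notice_days: int = 90       # notice period from the contract lever
    max_increase_pct: float = 20.0  # hypothetical threshold that triggers termination rights


def evaluate_price_change(clause: CostClause, old_price: float,
                          new_price: float, notice_days: int) -> list[str]:
    """Return the clause conditions a pricing change violates (empty if none)."""
    if old_price <= 0:
        raise ValueError("old_price must be positive")
    findings = []
    if notice_days < clause.min_notice_days:
        findings.append(f"notice {notice_days}d < required {clause.min_notice_days}d")
    increase_pct = (new_price - old_price) / old_price * 100
    if increase_pct > clause.max_increase_pct:
        findings.append(
            f"increase {increase_pct:.0f}% > cap {clause.max_increase_pct:.0f}%: termination right"
        )
    return findings


# DataCo's situation: 3x price on 60 days' notice (unit price is illustrative).
print(evaluate_price_change(CostClause(), old_price=1.00, new_price=3.00, notice_days=60))
# ['notice 60d < required 90d', 'increase 200% > cap 20%: termination right']
```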

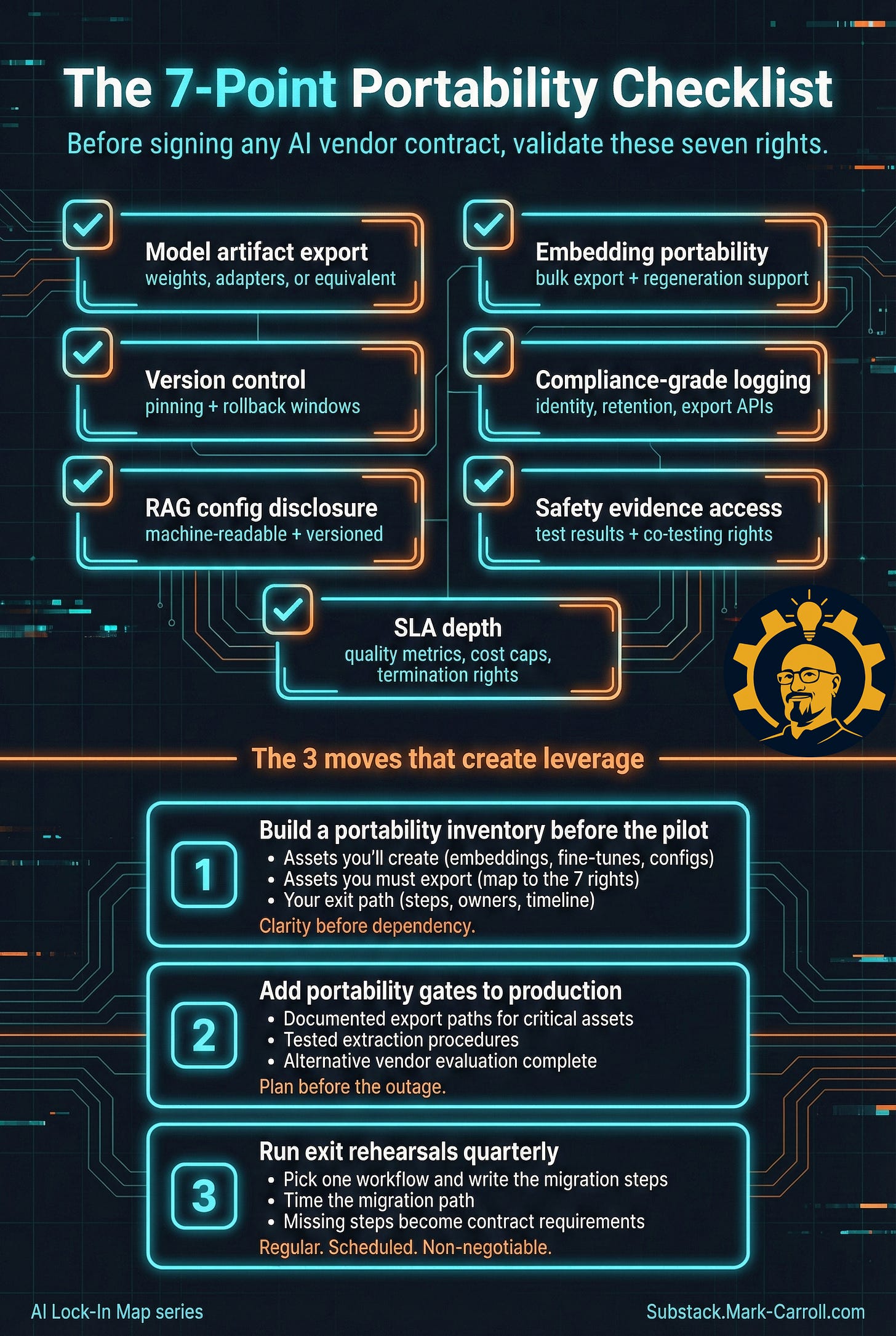

The portability playbook: What actually works

After all these conversations, I distilled the pattern that separates teams who maintain leverage from teams who get locked in.

The 7-Point Portability Checklist

Before signing any AI vendor contract, validate these seven rights:

Model artifact export (weights, adapters, or equivalent)

Embedding portability (bulk export + regeneration support)

Version control (pinning + rollback windows)

Compliance-grade logging (identity, retention, export APIs)

RAG configuration disclosure (machine-readable, versioned)

Safety evidence access (test results, co-testing rights)

SLA depth (quality metrics, cost caps, termination rights)
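The checklist doubles as a scoring rubric, which is what step 1 of the action plan asks for when it says to count your portability rights. A minimal sketch; the right names mirror the seven items above, and the example vendor’s answers are hypothetical inputs you would fill in after reading each contract.

```python
PORTABILITY_RIGHTS = (
    "model_artifact_export",
    "embedding_portability",
    "version_control",
    "compliance_logging",
    "rag_config_disclosure",
    "safety_evidence_access",
    "sla_depth",
)


def score_vendor(rights_present: dict[str, bool]) -> tuple[int, list[str]]:
    """Return (number of the 7 rights present, missing rights in checklist order)."""
    unknown = set(rights_present) - set(PORTABILITY_RIGHTS)
    if unknown:
        raise ValueError(f"unknown rights: {sorted(unknown)}")
    missing = [r for r in PORTABILITY_RIGHTS if not rights_present.get(r, False)]
    return len(PORTABILITY_RIGHTS) - len(missing), missing


# Hypothetical vendor: only version control and logging were negotiated.
score, gaps = score_vendor({"version_control": True, "compliance_logging": True})
print(f"{score}/7 rights; redlines needed: {gaps}")
```

The missing list comes back in checklist order, so it can go straight into a redline request.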

The 3 moves that create leverage

Move 1: Build a portability inventory before the pilot

Before your first API call, document:

What assets you’ll create (embeddings, fine-tunes, configs)

What assets you need to export (for each of the 7 traps)

What your exit path looks like

One PM told me: “We literally map out the exit plan in the first week. It forces us to understand the dependency before we create it.”
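A portability inventory can be a plain list of the assets a pilot will create, each tagged with the trap it falls under and whether its export path has actually been tested. A minimal sketch with hypothetical assets; an empty gap list is also a natural condition for the production gate in Move 2.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    trap: str            # which of traps A-G the asset falls under
    export_path: str     # how you would get it out ("" if unknown)
    export_tested: bool  # has anyone actually run the export?


def exit_plan_gaps(inventory: list[Asset]) -> list[str]:
    """List assets with no export path, or with an export path nobody has tested."""
    gaps = []
    for asset in inventory:
        if not asset.export_path:
            gaps.append(f"{asset.name} (trap {asset.trap}): no export path")
        elif not asset.export_tested:
            gaps.append(f"{asset.name} (trap {asset.trap}): export untested")
    return gaps


inventory = [
    Asset("support-ticket fine-tune", "A", "", False),
    Asset("help-center embeddings", "B", "bulk JSONL export", True),
    Asset("retrieval config", "E", "config repo", False),
]
for gap in exit_plan_gaps(inventory):
    print(gap)
```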

Move 2: Add portability gates to production

No AI system goes live without:

Documented export paths for critical assets

Tested data extraction procedures

Alternative vendor evaluation complete

Think of it like disaster recovery planning. You don’t wait until the disaster to figure out your backup strategy.

Move 3: Run exit rehearsals quarterly

Pick one workflow. Write the steps to migrate it to an alternative vendor. Time how long it takes. Missing steps become contract requirements.

The teams doing this well treat portability like security testing (regular, scheduled, non-negotiable).
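A rehearsal yields two things worth recording: a migration-time estimate (the KPI suggested in the action plan) and the steps that stalled for lack of a contractual right, which become redlines. A minimal sketch with hypothetical steps and durations; blocked steps are left out of the total, so while any exist the estimate is only a lower bound.

```python
from dataclasses import dataclass


@dataclass
class RehearsalStep:
    description: str
    estimated_days: float
    blocked_by_contract: bool  # True if the step needs a right the contract doesn't grant


def summarize_rehearsal(steps: list[RehearsalStep]) -> dict:
    """Total the migration estimate and collect blocked steps as contract redlines.

    Blocked steps have no reliable duration, so they are excluded from the
    total, which is then flagged as a lower bound.
    """
    blocked = [s.description for s in steps if s.blocked_by_contract]
    return {
        "estimated_days": sum(s.estimated_days for s in steps if not s.blocked_by_contract),
        "is_lower_bound": bool(blocked),
        "redlines": blocked,
    }


steps = [
    RehearsalStep("Export conversation logs with user IDs", 3, blocked_by_contract=False),
    RehearsalStep("Export fine-tuned adapter", 0, blocked_by_contract=True),
    RehearsalStep("Re-embed knowledge base on alternative provider", 10, blocked_by_contract=False),
    RehearsalStep("Side-by-side quality evaluation", 15, blocked_by_contract=False),
]
print(summarize_rehearsal(steps))
```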

The company examples that changed my thinking

Stripe’s approach: From day one, they assume vendor relationships are temporary. Every AI contract includes migration assistance clauses and artifact export rights. They’ve switched embedding providers twice without major disruption because they planned for it.

Notion’s learning: After nearly getting stuck on an embedding vendor, they now maintain export scripts and test them monthly. They can regenerate their entire vector database in 48 hours if needed.

A healthcare AI startup (anonymized): They negotiated co-red-teaming rights in their contract. Every quarter, they run safety tests on their deployment. The vendor has to log, classify, and respond to issues. It’s the only way they could get through FDA conversations with confidence.

The meta-lesson: Lock-in is structural, not technical

Here’s what surprised me most: the AI lock-in problem isn’t really an AI problem.

The same patterns that create dependency in AI existed with cloud providers, SaaS tools, and databases. We solved them with standards, export tools, and better contracts.

The difference with AI is velocity. Teams move fast (“it’s just a pilot”), vendors move fast (weekly model updates), and contracts lag behind (using templates from 2019).

The gap between “prototype” and “production dependency” collapses from months to weeks. Lock-in happens before anyone notices.

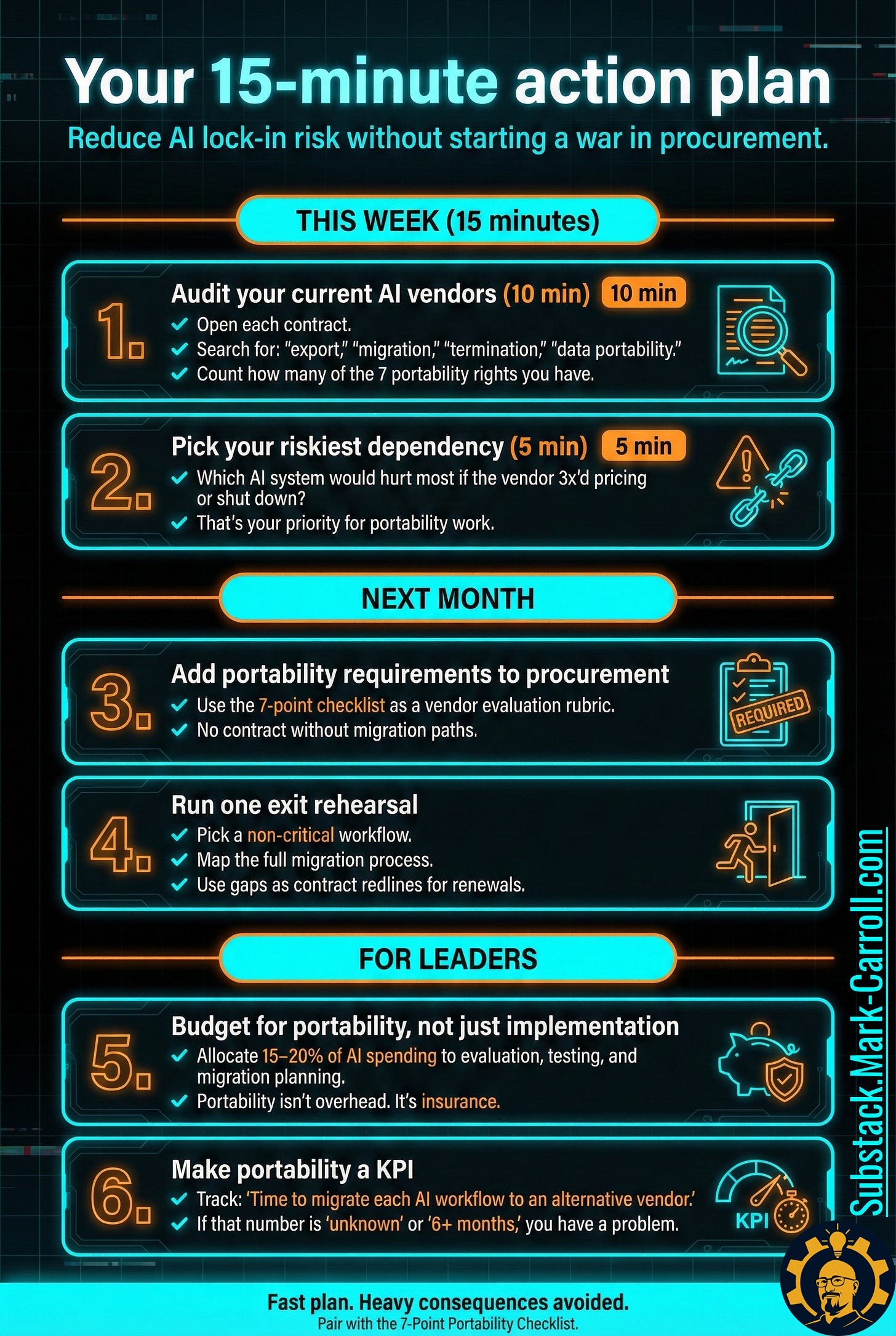

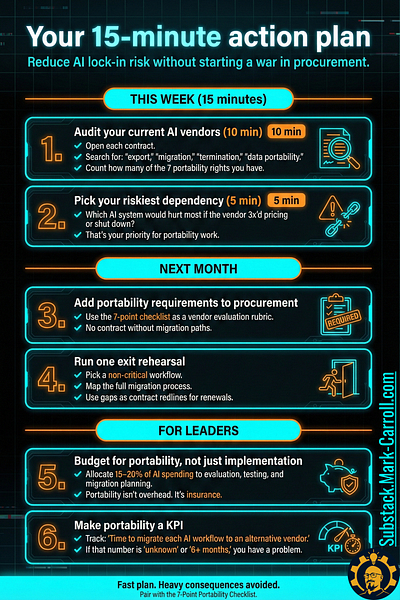

Your 15-minute action plan

This week:

1. Audit your current AI vendors (10 min). Open each contract. Search for: “export,” “migration,” “termination,” “data portability.” Count how many of the 7 portability rights you have.

2. Pick your riskiest dependency (5 min). Which AI system would hurt most if the vendor 3x’d pricing or shut down? That’s your priority for portability work.

Next month:

3. Add portability requirements to procurement. Use the 7-point checklist as a vendor evaluation rubric. No contract without migration paths.

4. Run one exit rehearsal. Pick a non-critical workflow. Map the full migration process. Use gaps as contract redlines for renewals.

For leaders:

5. Budget for portability, not just implementation. Allocate 15-20% of AI spending to evaluation, testing, and migration planning. Portability isn’t overhead. It’s insurance.

6. Make portability a KPI. Track: “Time to migrate each AI workflow to an alternative vendor.” If that number is “unknown” or “6+ months,” you have a problem.
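Step 1’s keyword search is easy to script once contracts are available as plain text. A minimal sketch, assuming you have already extracted each contract’s text; it only locates the terms, so someone still has to read every surrounding clause, and a missing term is a prompt to check, not proof the right is absent.

```python
import re

# Search terms from step 1 of the action plan.
TERMS = ("export", "migration", "termination", "data portability")


def scan_contract(text: str, terms: tuple[str, ...] = TERMS,
                  context_chars: int = 60) -> dict[str, list[str]]:
    """Map each term to short snippets around every case-insensitive match."""
    hits: dict[str, list[str]] = {}
    for term in terms:
        pattern = re.compile(re.escape(term), re.IGNORECASE)
        snippets = []
        for m in pattern.finditer(text):
            start = max(0, m.start() - context_chars)
            snippets.append(text[start:m.end() + context_chars].replace("\n", " "))
        hits[term] = snippets
    return hits


def missing_terms(text: str, terms: tuple[str, ...] = TERMS) -> list[str]:
    """Terms that never appear: a quick signal of clauses worth redlining."""
    return [term for term, snippets in scan_contract(text, terms).items() if not snippets]
```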

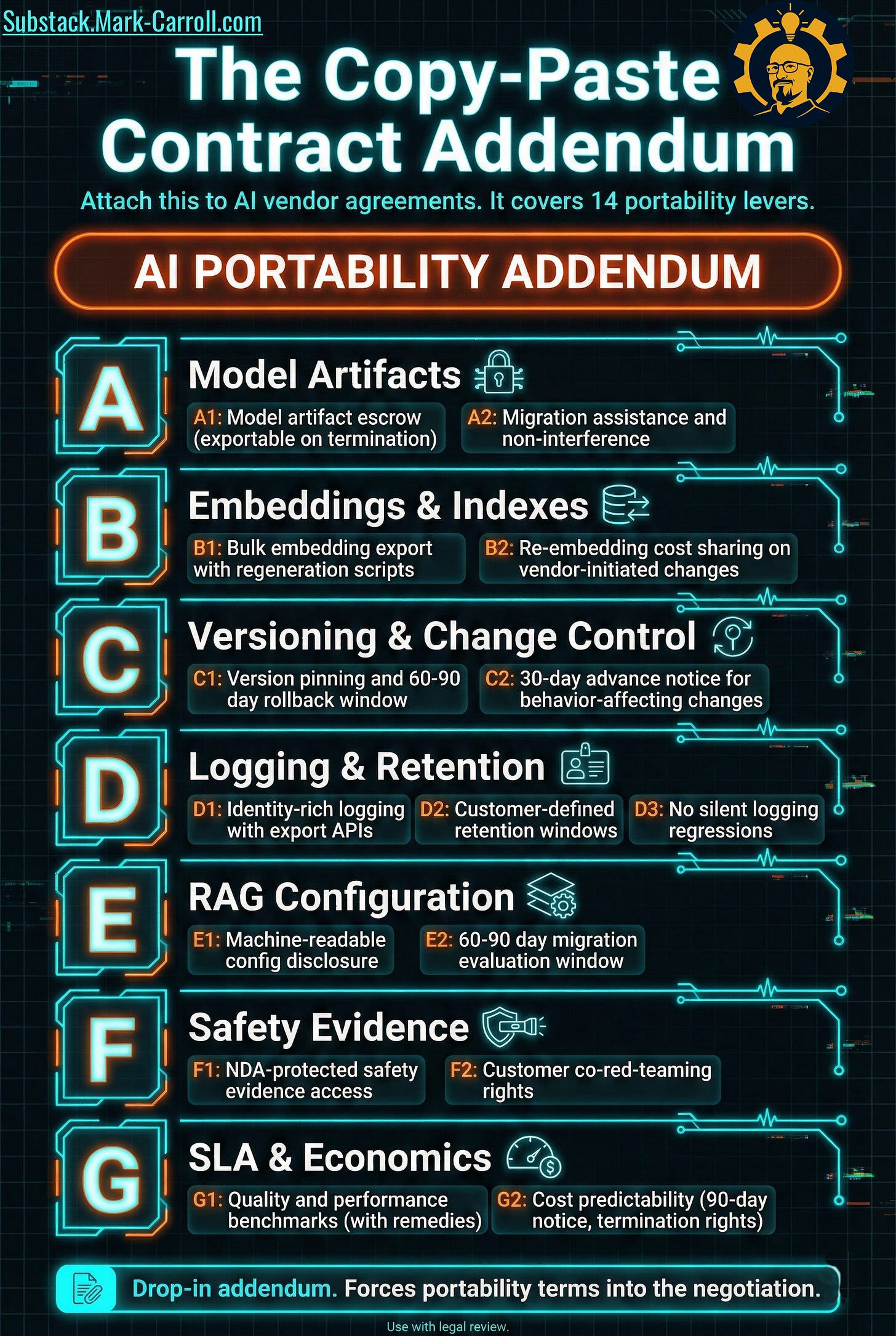

The copy-paste contract addendum

I worked with two procurement teams to create a simple addendum you can attach to AI vendor agreements. It covers the 15 most important levers:

AI PORTABILITY ADDENDUM

A. Model Artifacts

A1: Model artifact escrow (exportable on termination)

A2: Migration assistance and non-interference

B. Embeddings & Indexes

B1: Bulk embedding export with regeneration scripts

B2: Re-embedding cost sharing on vendor-initiated changes

C. Versioning & Change Control

C1: Version pinning and 60-90 day rollback window

C2: 30-day advance notice for behavior-affecting changes

D. Logging & Retention

D1: Identity-rich logging with export APIs

D2: Customer-defined retention windows

D3: No silent logging regressions

E. RAG Configuration

E1: Machine-readable config disclosure

E2: 60-90 day migration evaluation window

F. Safety Evidence

F1: NDA-protected safety evidence access

F2: Customer co-red-teaming rights

G. SLA & Economics

G1: Quality and performance benchmarks (with remedies)

G2: Cost predictability (90-day notice, termination rights)

What I got wrong

When I started this research, I thought open-source models would solve the portability problem. They don’t, at least not completely.

Even with open weights, you still face:

Hosting dependencies (infrastructure, optimization, serving)

Evaluation dependencies (how do you know it’s safe?)

Support dependencies (who do you call when it breaks?)

Open models reduce lock-in, but they don’t eliminate it. You still need good contracts.

The question I’m sitting with

The companies that get this right treat AI portability the way they treat infrastructure portability: as a non-negotiable design constraint, not a nice-to-have feature.

But here’s what I keep wondering: Are we asking too much of individual companies?

No team should have to negotiate custom portability clauses on its own. This should be standardized. We need the AI equivalent of GDPR’s data portability requirements or healthcare’s data interoperability mandates.

Until then, leverage lives in contracts.

You now have the map, the checklist, and a 15-minute plan. That is the difference between “we tried an AI pilot” and “we built a system we can actually leave.”

If this series is your threat briefing, my book is the operating manual.

Collaborate Better is about building teams that stay resilient under pressure. The kind that do not become dependent on one vendor, one hero, or one undocumented workflow. It’s the playbook for turning smart intentions into durable execution, without burning trust, time, or people.

Next week in Architects of Autonomy: The Safety Case File.

How to demand real safety evidence, interpret it like an investigator, and spot the difference between a polished system card and a true case file before you bet your org on it.

Quick poll

Where does your team stand on AI portability?

We have contracts with 5+ of the 7 portability rights

We have some portability clauses but not comprehensive

We’ve discussed it but haven’t prioritized it

We haven’t thought about this yet (honestly)

Drop a comment and let me know what I missed. Specifically:

What portability challenges have you hit that I didn’t cover?

What contract clauses have worked for you?

Which vendors are easiest/hardest to negotiate with on this?

Thanks to the 12 PMs, engineers, and procurement leads who shared their war stories for this piece (NDAs prevent me from naming them).

If you found this useful, forward it to your procurement team. They’ll thank you later.
